Assignment 1: Build Your Own LLaMa

Type: Individual Assignment

Released: Wednesday, January 28

Due: Thursday, February 12 at 11:59 PM

Summary

In this assignment, you will implement core components of the Llama2 transformer architecture to gain hands-on experience with modern language modeling. You will build a minimalist version of Llama2 and apply it to text continuation and sentence classification tasks.

- Collaboration Policy: Please read the collaboration policy here: https://cmu-l3.github.io/anlp-spring2026/

- Late Submission Policy: See the late submission policy here: https://cmu-l3.github.io/anlp-spring2026/

- Submitting your work: You will use Canvas to submit your implementation files and output results. Please follow the submission instructions carefully to ensure proper grading. See the submission guidelines here: https://github.com/ialdarmaki/anlp-spring2026-hw1

1. Overview

This assignment focuses on implementing core components of the Llama2 transformer architecture to give you hands-on experience with modern language modeling techniques. You will build a mini version of Llama2 and apply it to text continuation and sentence classification tasks. You will also be responsible for implementing additional modules, such as the AdamW optimizer and top-p sampling.

The assignment consists of two main parts:

Part 1: Core Implementation. In this part, you will complete the missing components of the Llama2 model architecture. Specifically, implement:

- Attention mechanisms

- Feed-forward networks

- Layer normalization (LayerNorm)

- Positional encodings (RoPE)

Part 2: Applications. After completing the core implementation, apply your model to the following tasks:

- Text Generation – generate coherent continuations for given prompts.

- Zero-shot Classification – classify inputs without task-specific fine-tuning.

- Addition Pretraining – update modules so that Llama2 can solve addition problems.

2. Understanding the Llama2 Architecture

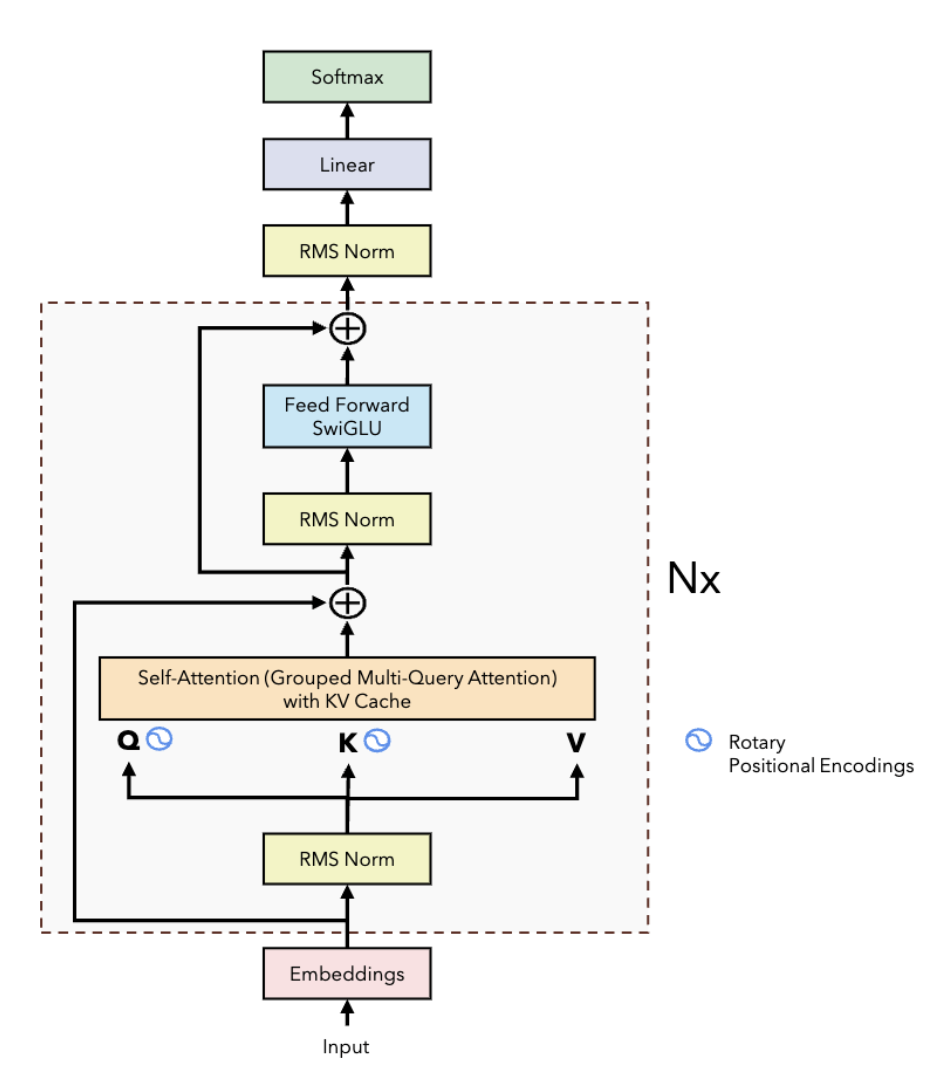

Figure 1: LLaMA Architecture Overview showing the transformer-based structure with RMSNorm pre-normalization, SwiGLU activation function, rotary positional embeddings (RoPE), and grouped-query attention (GQA).

Sources:

- PyTorch LLaMA Implementation Notes

- Llama 2: Open Foundation and Fine-Tuned Chat Models

Llama2 is a transformer-based language model that improves on the original Llama architecture. Its key features include RMSNorm pre-normalization, SwiGLU activation, rotary positional embeddings (RoPE), and grouped-query attention (GQA).
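Feed-forward networks appear in the Part 1 list but are not described separately below. Assuming the assignment's feed-forward block follows Llama2's SwiGLU design (a SiLU-gated projection multiplied elementwise by a second projection, then projected back to the model dimension), a minimal pure-Python sketch for one token vector could look like this. The weight names W1, W2, W3 and all helper functions are hypothetical, not the starter code's API.

```python
import math

def silu(x: float) -> float:
    """SiLU (Swish) activation x * sigmoid(x), written to avoid exp overflow."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)

def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def swiglu_ffn(x, W1, W3, W2):
    """Hypothetical Llama-style feed-forward: W2 @ (silu(W1 @ x) * (W3 @ x)).

    W1 and W3 have shape (hidden, dim); W2 has shape (dim, hidden).
    """
    gate = [silu(h) for h in matvec(W1, x)]    # gated branch
    up = matvec(W3, x)                         # linear branch
    hidden = [g * u for g, u in zip(gate, up)] # elementwise gating
    return matvec(W2, hidden)                  # project back to dim

identity = [[1.0, 0.0], [0.0, 1.0]]
print(swiglu_ffn([1.0, 2.0], identity, identity, identity))
```

With identity weights the output is simply silu(x_i) * x_i for each feature, which makes the gating easy to check by hand.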

In this assignment, you will implement the following core components:

1. LayerNorm (Layer Normalization)

In this assignment, we will use LayerNorm instead of RMSNorm (which is used in Llama2). You will implement LayerNorm in llama.py. This normalization operates across features for each data point, reducing internal covariate shift, stabilizing gradients, and accelerating training.

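Here, $\bar{a}_i$ is the normalized input, $g_i$ is a learnable gain, and $\mu$, $\sigma$ are the mean and standard deviation of the inputs $a$, computed over the $n$ features:

$$
\bar{a}_i = \frac{a_i - \mu}{\sigma}\, g_i, \qquad \mu = \frac{1}{n}\sum_{i=1}^{n} a_i, \qquad \sigma = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (a_i - \mu)^2}
$$

In practice, a small constant $\epsilon$ is added to the variance under the square root for numerical stability.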

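Because each input vector is re-centered and re-scaled, the normalized activations are invariant to shifting and rescaling of the inputs (and hence of the weights that produce them), which improves training stability.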
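A minimal pure-Python sketch of LayerNorm for a single feature vector: subtract the mean μ, divide by the standard deviation σ, and multiply by the gain g. The eps value is an assumption (common practice, to avoid dividing by zero), and there is no bias term. The implementation in llama.py operates on tensors, and its exact signature may differ.

```python
import math

def layer_norm(a, g, eps=1e-6):
    """LayerNorm over the features of one vector a, with learnable gain g."""
    n = len(a)
    mu = sum(a) / n                              # mean of the inputs
    var = sum((x - mu) ** 2 for x in a) / n      # (biased) variance
    sigma = math.sqrt(var + eps)                 # eps guards against sigma = 0
    return [(x - mu) / sigma * gi for x, gi in zip(a, g)]

a = [1.0, 2.0, 3.0, 4.0]
print([round(v, 3) for v in layer_norm(a, [1.0] * 4)])                      # [-1.342, -0.447, 0.447, 1.342]
print([round(v, 3) for v in layer_norm([2 * x + 5 for x in a], [1.0] * 4)])  # [-1.342, -0.447, 0.447, 1.342]
```

The second call shows the invariance property: shifting and rescaling the input leaves the normalized output essentially unchanged.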

2. Scaled Dot-Product Attention and Grouped Query Attention

Scaled Dot-Product Attention is the fundamental building block of the Transformer architecture (Vaswani et al., 2017). It computes attention scores by comparing queries (Q) with keys (K). These scores determine how much each token attends to every other token and are then used to weight the values (V). Because large dot products push the softmax into regions with extremely small gradients, which destabilizes training, the scores are scaled by the square root of the key dimension $d_k$ before the softmax is applied:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right) V
$$

In this assignment, you will implement the scaled dot-product attention mechanism in the function compute_query_key_value_scores within llama.py.

The diagram below (Figure 2a) shows the high-level process: Q and K are multiplied, scaled, optionally masked (to prevent attending to certain positions), and normalized with softmax before being applied to V.

In self-attention, the same input embeddings X are projected into queries (Q), keys (K), and values (V) using learned weight matrices (W_Q, W_K, W_V). These separate projections allow the model to learn different views of each token depending on whether it is acting as a query, a key, or a value. Once Q, K, and V are obtained, the self-attention mechanism compares every query with all keys to produce attention scores, which are then used to weight the corresponding values.
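The attention steps above can be sketched for a single head in plain Python. This assumes Q, K, and V have already been produced by the projections W_Q, W_K, W_V, and it applies a causal mask (each position attends only to itself and earlier positions), as in decoder-only models like Llama. The function names are illustrative; compute_query_key_value_scores in llama.py works on batched tensors instead of lists.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores (may contain -inf)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V, causal=True):
    """Scaled dot-product attention for a single head.

    Q and K are lists of seq_len rows of length d_k; V has seq_len rows of length d_v.
    """
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        # Dot product of query i with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # Mask future positions so token i only attends to positions 0..i.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output row i is the attention-weighted sum of the value rows.
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out

Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
out = attention(Q, K, V)
print(out[0])  # [1.0, 0.0]: the first token can only attend to itself
```

Masked scores are set to negative infinity before the softmax, so they receive exactly zero weight.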

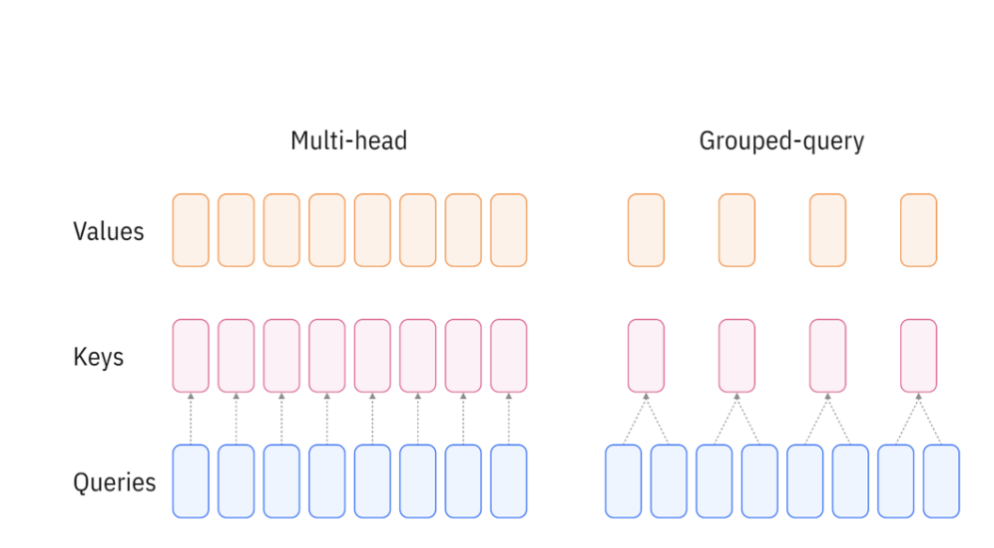

In practice, a single self-attention head may not capture all types of relationships in a sequence. To address this, Multi-Head Attention (MHA) runs several independent self-attention operations (heads) in parallel, each with its own projections, and concatenates their results. This allows the model to attend to information from multiple representation subspaces simultaneously, greatly enriching its representations. However, storing and loading separate keys and values for every head becomes costly at scale, especially in the key-value cache used during autoregressive decoding. Grouped Query Attention (GQA) was introduced to reduce this overhead (Ainslie et al., 2023). Instead of giving each query head its own key and value heads, the query heads are divided into groups, and all query heads in a group share a single key head and value head. Figure 2b shows how GQA works.
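A minimal sketch of the grouping, assuming the number of query heads is a multiple of the number of key/value heads. Each KV head is repeated so that consecutive query heads share it, and then ordinary per-head attention runs unchanged. The helper names (attend, repeat_kv, grouped_query_attention) are hypothetical, and the compact attention function omits masking for brevity.

```python
import math

def attend(Q, K, V):
    """Unmasked scaled dot-product attention for one head (lists of rows)."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([sum(e / total * v[c] for e, v in zip(exps, V)) for c in range(len(V[0]))])
    return out

def repeat_kv(kv_heads, n_rep):
    """[h0, h1] with n_rep=2 -> [h0, h0, h1, h1]: each KV head serves n_rep query heads."""
    return [head for head in kv_heads for _ in range(n_rep)]

def grouped_query_attention(q_heads, k_heads, v_heads):
    """Run attention per query head, sharing KV heads within each group."""
    n_q, n_kv = len(q_heads), len(k_heads)
    assert n_q % n_kv == 0, "query heads must divide evenly into KV groups"
    n_rep = n_q // n_kv
    k_full, v_full = repeat_kv(k_heads, n_rep), repeat_kv(v_heads, n_rep)
    return [attend(q, k, v) for q, k, v in zip(q_heads, k_full, v_full)]

# 4 query heads share 2 KV heads (seq_len 2, head_dim 2); only 2 K/V heads are stored.
q_heads = [[[1.0, 0.0], [0.0, 1.0]]] * 4
k_heads = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
v_heads = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
out = grouped_query_attention(q_heads, k_heads, v_heads)
print(len(out))  # 4: one output per query head
```

With one KV head this reduces to multi-query attention, and with as many KV heads as query heads it is standard multi-head attention.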

3. Rotary Positional Embeddings (RoPE)

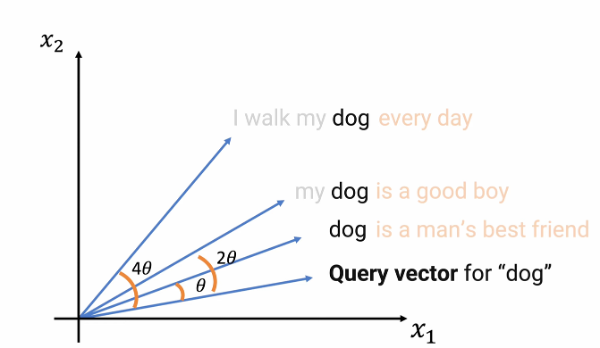

Rotary Positional Embeddings (RoPE) are currently used in LLMs such as Llama. Instead of adding position vectors to the token embeddings, RoPE encodes position by rotating the query and key vectors: each vector is split into pairs of dimensions, and each pair is rotated in its own 2D plane by an angle proportional to the token's position in the sequence, with the rotation frequencies controlled by a hyperparameter theta. In this assignment, you will implement RoPE in the function apply_rotary_emb within rope.py.

Figure 3a: RoPE Overview showing the rotary positional embedding technique for the word "dog" in different positions. Figure from How Rotary Position Embedding Supercharges Modern LLMs.

As shown in the diagrams, the word "dog" is rotated based on its position. For example, in the sentence "I walk my dog every day," it is rotated by 4θ; in "my dog is a good boy," by 2θ; and in "dog is a man's best friend," by θ. In general, a rotation transformation is applied to query and key vectors before computing attention scores.
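Written out, following the formulation in the RoFormer paper (Su et al., 2021), which pairs adjacent dimensions: for a query or key vector $x$ of dimension $d$ at position $m$, the $i$-th pair of dimensions is rotated by the angle $m\theta_i$. Here $\theta_i$ is a per-pair frequency; the base 10000 is the paper's default and is assumed here (implementations often expose this base as a parameter named theta).

$$
\begin{pmatrix} x'_{2i-1} \\ x'_{2i} \end{pmatrix}
=
\begin{pmatrix} \cos m\theta_i & -\sin m\theta_i \\ \sin m\theta_i & \cos m\theta_i \end{pmatrix}
\begin{pmatrix} x_{2i-1} \\ x_{2i} \end{pmatrix},
\qquad
\theta_i = 10000^{-2(i-1)/d}, \quad i = 1, \dots, d/2
$$

Stacking these 2×2 blocks gives a block-diagonal rotation matrix $R_m$. Since $R_m^\top R_n = R_{n-m}$, the attention score between a query at position $m$ and a key at position $n$ depends only on their relative offset:

$$
(R_m q)^\top (R_n k) = q^\top R_{n-m}\, k
$$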

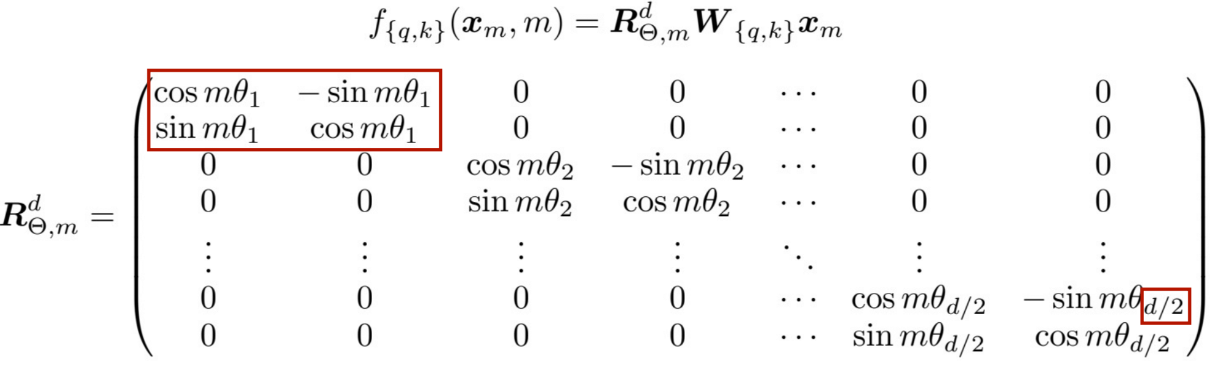

Figure 3b: Rotation Matrix for Rotary Positional Embedding.

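The rotation matrix looks complex, but it is block-diagonal: each pair of dimensions is rotated in its own 2D plane by an angle determined by the token's position and a pair-specific frequency derived from theta, and the rotated pairs are then concatenated back into a single vector. Because the rotation is defined for any position, RoPE can in principle be applied at inference time to sequences longer than those seen during training (Figure 3c), and adjusting the hyperparameter theta can help extend the usable sequence length after training.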
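A minimal pure-Python sketch of the per-pair rotation for a single vector, assuming adjacent dimensions are paired and a base of 10000 (the RoFormer default). apply_rope is a hypothetical helper; apply_rotary_emb in rope.py operates on batched query and key tensors, and the starter code defines its exact conventions.

```python
import math

def apply_rope(x, pos, base=10000.0):
    """Rotate each adjacent pair (x[2j], x[2j+1]) by pos * base**(-2j/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for j in range(d // 2):
        angle = pos * base ** (-2 * j / d)   # later pairs rotate more slowly
        c, s = math.cos(angle), math.sin(angle)
        x0, x1 = x[2 * j], x[2 * j + 1]
        out += [x0 * c - x1 * s, x0 * s + x1 * c]
    return out

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

q = [1.0, 0.0, 0.5, -0.5]
k = [0.3, 0.7, -1.0, 0.2]
# Both scores use a query/key offset of 2, so they agree up to rounding error.
print(dot(apply_rope(q, 3), apply_rope(k, 1)))
print(dot(apply_rope(q, 10), apply_rope(k, 8)))
```

Rotations preserve vector length, so RoPE changes only the direction of queries and keys, and the attention score depends only on relative position.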

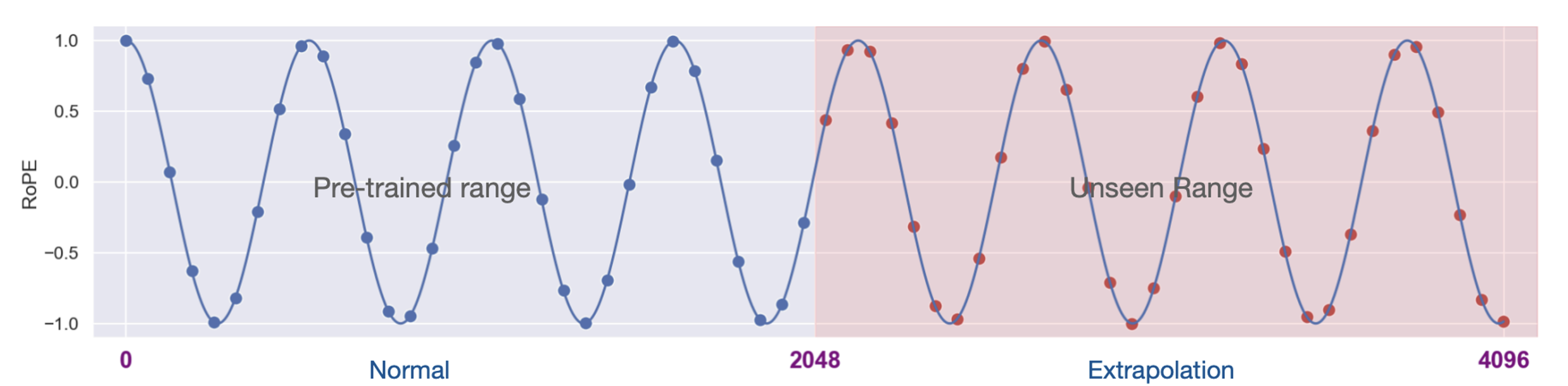

Figure 3c: RoPE extrapolation for unseen positions.

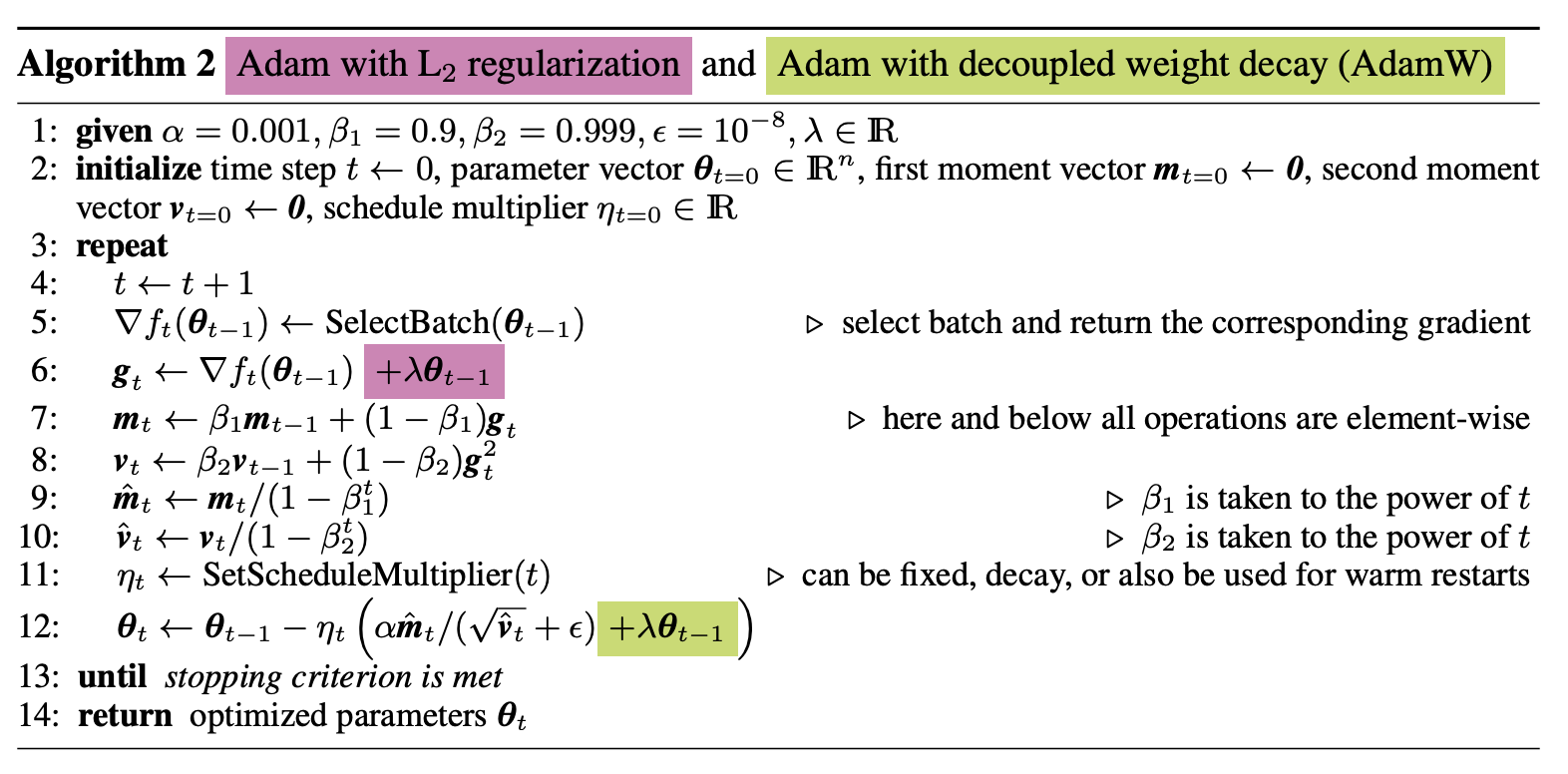

4. AdamW Optimizer

AdamW is a variant of the Adam optimizer that decouples weight decay from the gradient-based update. Instead of adding an L2 penalty to the gradient, where Adam's per-parameter scaling would also rescale the decay, AdamW shrinks the weights directly. This leads to more effective regularization and better generalization in transformer models, and AdamW is widely used to train large language models. In this assignment, you will implement the AdamW algorithm (Algorithm 2 in Loshchilov & Hutter, 2017, shown below) in optimizer.py.

For a detailed explanation, mathematical formulations, and pseudocode, refer to the referenced papers.

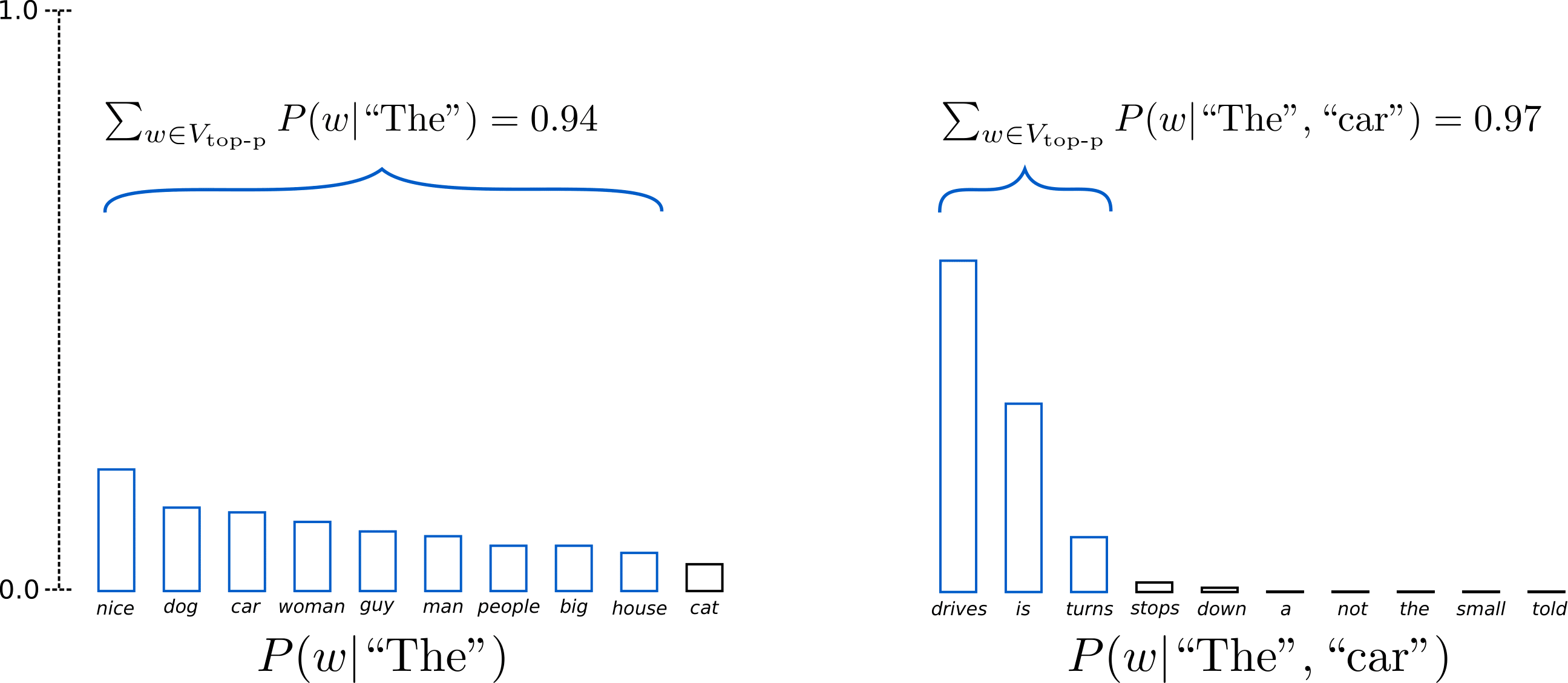
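A minimal pure-Python sketch of one AdamW step for a flat list of parameters. It follows the convention of PyTorch's torch.optim.AdamW, where the decoupled decay is applied to the pre-update weights and scaled by the learning rate. Algorithm 2 in the paper scales the decay by a schedule multiplier η_t instead. The function names and the state layout are hypothetical, not the interface expected in optimizer.py.

```python
import math

def init_state(n):
    """Optimizer state: step count plus first/second moment estimates."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}

def adamw_step(params, grads, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=0.01):
    """Update params in place with one AdamW step."""
    b1, b2 = betas
    state["t"] += 1
    t = state["t"]
    for i, g in enumerate(grads):
        # Decoupled weight decay: shrink the weight directly, not via the gradient.
        params[i] -= lr * weight_decay * params[i]
        # Exponential moving averages of the gradient and squared gradient.
        state["m"][i] = b1 * state["m"][i] + (1 - b1) * g
        state["v"][i] = b2 * state["v"][i] + (1 - b2) * g * g
        # Bias correction for the zero-initialized moments.
        m_hat = state["m"][i] / (1 - b1 ** t)
        v_hat = state["v"][i] / (1 - b2 ** t)
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)

params = [1.0, -2.0]
state = init_state(len(params))
adamw_step(params, [0.5, 0.0], state, lr=0.1, weight_decay=0.1)
print(params)
```

The second parameter has a zero gradient, so it changes only through weight decay. In Adam with L2 regularization, the decay term would instead be added to g and then divided by sqrt(v_hat), which is exactly the coupling AdamW removes. Setting weight_decay=0 recovers plain Adam.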

5. Top-p (Nucleus) Sampling

Top-p (nucleus) sampling is a text generation strategy that sorts candidate tokens by probability, keeps the smallest set of tokens whose cumulative probability reaches a threshold p, renormalizes the probabilities within that set, and then samples the next token from this smaller subset. Because the size of the subset adapts to how peaked the distribution is, this approach provides a simple yet effective way to balance diversity and quality in generated text by removing the low-probability tail that might lead to incoherent outputs. You will implement top-p (nucleus) sampling in the function generate within llama.py.

Figure 6: Top-p Sampling Overview showing the advanced decoding technique for text generation. Figure from How to generate text: using different decoding methods for language generation with Transformers
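A minimal pure-Python sketch of nucleus sampling over an already-computed probability distribution, such as a softmax over the model's logits at the last position. top_p_filter and sample_top_p are hypothetical helpers. In generate within llama.py, the same logic would operate on PyTorch tensors.

```python
import random

def top_p_filter(probs, p):
    """Return {token_id: renormalized probability} for the nucleus: the smallest
    set of most-probable tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:   # stop once the nucleus covers probability mass p
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}

def sample_top_p(probs, p, rng=random):
    """Sample one token id from the renormalized nucleus."""
    nucleus = top_p_filter(probs, p)
    tokens = list(nucleus)
    return rng.choices(tokens, weights=[nucleus[t] for t in tokens], k=1)[0]

probs = [0.5, 0.3, 0.15, 0.05]
print(top_p_filter(probs, 0.75))   # keeps tokens 0 and 1, with probabilities of about 0.625 and 0.375
print(sample_top_p(probs, 0.75, random.Random(0)))
```

With p = 0.75, tokens 2 and 3 can never be sampled. A flatter distribution would need more tokens to reach the same mass, which is how the nucleus adapts its size.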

3. Tasks and Datasets

After implementing the core components from Part 1 (attention mechanisms, feed-forward networks, layer normalization, RoPE, and the optimizer), you will test your model on three main tasks:

- Text continuation – complete prompts to produce coherent continuations.

- Sentiment classification – classify movie reviews from two datasets: the Stanford Sentiment Treebank (SST), for 5-class sentiment analysis of single sentences from movie reviews, and CFIMDB, for binary sentiment classification of full movie reviews. This task uses zero-shot prompting (the pretrained model is applied directly with crafted prompts), and performance is measured by classification accuracy.

- Addition – train a new mini-Llama model to answer addition questions.
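One common way to do zero-shot classification with a language model is to score each candidate label word as a continuation of a prompt and pick the most likely one. The sketch below assumes that approach. The prompt template, the label words, and toy_log_prob (a crude stand-in for the model's log-probability of a continuation) are all hypothetical; the repository defines the actual prompts and scoring used for SST and CFIMDB.

```python
def zero_shot_classify(text, label_words, log_prob):
    """Return the label whose label word is most likely after the prompt.

    label_words maps label -> word; log_prob(prompt, continuation) -> float
    stands in for the language model's scoring function.
    """
    prompt = f'Review: "{text}" The sentiment of this review is'
    scores = {label: log_prob(prompt, " " + word) for label, word in label_words.items()}
    return max(scores, key=scores.get)

def accuracy(predictions, gold):
    """Fraction of predictions that match the gold labels."""
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)

def toy_log_prob(prompt, continuation):
    # Toy stand-in for the model: favors " positive" when the prompt says "great".
    likes_it = "great" in prompt
    if continuation == " positive":
        return -0.1 if likes_it else -2.0
    return -2.0 if likes_it else -0.1

labels = {1: "positive", 0: "negative"}
reviews = ["a great film", "a dull, lifeless film"]
preds = [zero_shot_classify(r, labels, toy_log_prob) for r in reviews]
print(preds, accuracy(preds, [1, 0]))  # [1, 0] 1.0
```

No parameters are updated: the model's existing next-token probabilities do all the work, which is what makes the setting zero-shot.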

Implementation Details

For detailed instructions on how to run these tasks, including setup procedures, command-line arguments, and execution steps, please refer to the GitHub repository for this assignment: https://github.com/ialdarmaki/anlp-spring2026-hw1/tree/main. The repository contains comprehensive documentation and example scripts to help you successfully complete each task.

4. Advanced Methods

The A+ grade is reserved for the top 5% of students who explore the boundaries of training and demonstrate a strong understanding of model capacity through systematic experimentation. You must perform multiple ablation studies and use them to identify the smallest LLaMA architecture that achieves 100% accuracy on the test set while remaining within the specified model capacity constraint. You are required to submit at least 5 different ablations, as well as your best-performing (smallest) model, with each experiment saved in a separate directory as instructed. Ensure that all experiments are executable using the provided commands. You must plot the results of all experiments and save training logs for each run. Submissions that do not include the required ablations, plots, and logs will not be eligible for an A+.
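When searching for the smallest architecture that meets the capacity constraint, it can help to count parameters before training. The sketch below assumes a Llama-style layout with no bias terms in the linear layers: token embeddings, per-layer query/key/value/output projections (with GQA key/value heads), a three-matrix SwiGLU feed-forward, two normalization layers per block plus a final one, and an untied output head. The function and its arguments are hypothetical. Check the result against sum(p.numel() for p in model.parameters()) on the actual model.

```python
def count_params(vocab_size, dim, n_layers, n_heads, n_kv_heads, hidden_dim,
                 norm_has_bias=False, tie_embeddings=False):
    """Parameter count of a Llama-style decoder under the layout assumed above."""
    head_dim = dim // n_heads
    kv_dim = n_kv_heads * head_dim                   # GQA shrinks the K/V projections
    attn = dim * dim + 2 * dim * kv_dim + dim * dim  # wq, wk + wv, wo
    ffn = 3 * dim * hidden_dim                       # w1, w2, w3 (SwiGLU)
    norm = dim * (2 if norm_has_bias else 1)         # gain (+ optional bias) per norm layer
    per_layer = attn + ffn + 2 * norm                # attention norm + FFN norm
    embed = vocab_size * dim
    head = 0 if tie_embeddings else vocab_size * dim
    return embed + n_layers * per_layer + norm + head

print(count_params(vocab_size=16, dim=8, n_layers=2, n_heads=2, n_kv_heads=1, hidden_dim=16))  # 1448
```

Sweeping dim, n_layers, n_kv_heads, and hidden_dim with a helper like this is one way to lay out ablation configurations that stay under the capacity limit.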

5. Submission Instructions and Grading

For comprehensive submission instructions and grading criteria, please refer to the assignment GitHub repository.